When it comes to text generation, there are always many questions, such as:

- Where does each word come from?

- Does the model learn languages the way a child does?

Characteristics of text

- Text data has a time dimension. The order of words is highly important.

- Language is governed by rules, which we call grammar.

- Text data is discrete: characters and words are not continuous values like image pixels, which makes text harder to represent numerically.
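Because words are discrete symbols, a model first has to map them to numbers. A common choice, sketched below with a tiny hand-built vocabulary (an illustrative assumption, not a real dataset), is to give each word an integer id and, if needed, turn that id into a one-hot vector:

```python
import numpy as np

# Toy sentence and vocabulary (illustrative only).
sentence = "the cat sat on the mat".split()
vocab = sorted(set(sentence))                  # ['cat', 'mat', 'on', 'sat', 'the']
word_to_id = {w: i for i, w in enumerate(vocab)}

# Discrete integer ids: nothing lies "between" id 1 and id 2.
ids = [word_to_id[w] for w in sentence]

# One-hot vectors: one row per word, with a single 1 at the word's id.
one_hot = np.eye(len(vocab), dtype=int)[ids]

print(ids)            # [4, 0, 3, 2, 4, 1]
print(one_hot.shape)  # (6, 5)
```

Unlike pixel intensities, these ids carry no meaningful ordering or distance, which is one reason models usually learn dense embeddings on top of them.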

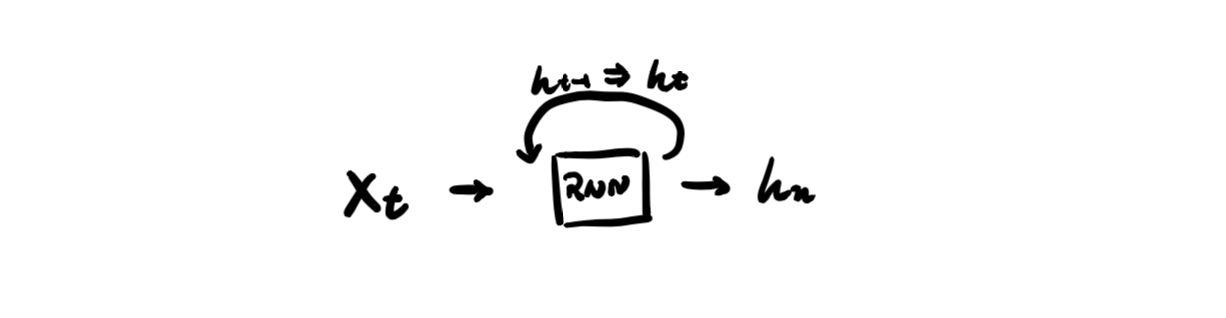

RNN

An RNN used for text generation is a typical example of an autoregressive model: it uses observations from previous time steps as input to predict the value at the next time step.

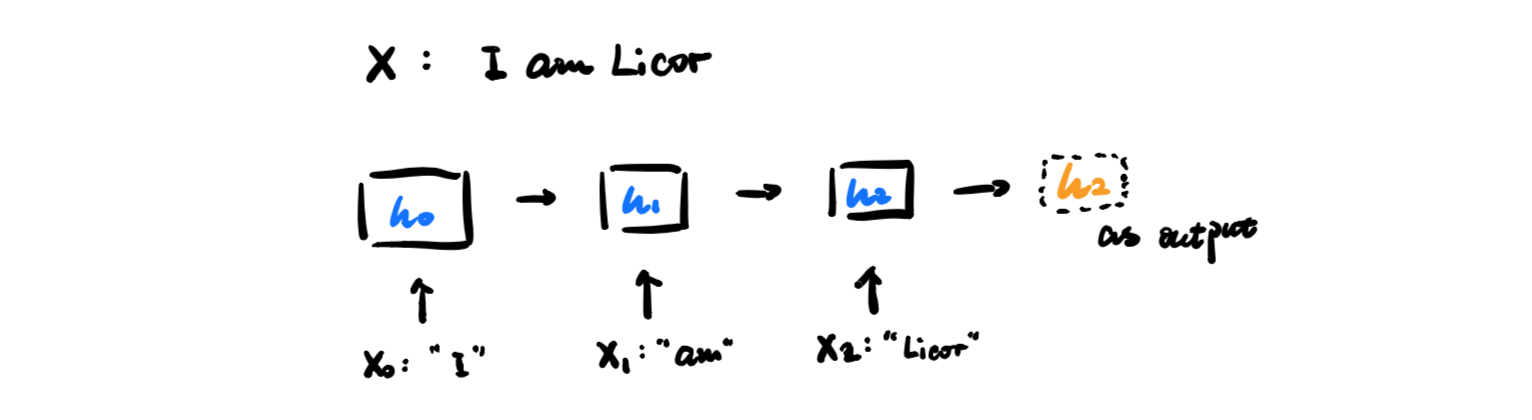

This becomes clearer if we unroll the RNN over time. Given an input, the RNN cell computes a new hidden state from that input and the previous hidden state using an update function whose weights are shared across all time steps. We then feed the next input, and the hidden state is updated again. The weights stay the same during this pass; what changes is the hidden state, which summarizes the input seen so far as the sequence flows in. The output of this RNN is the final hidden state.
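A minimal NumPy sketch of this unrolled loop, assuming a vanilla (Elman) RNN with the update h_t = tanh(W_xh · x_t + W_hh · h_{t-1} + b_h) and random, untrained weights. The names `W_xh`, `W_hh`, `b_h` and `rnn_forward` are illustrative. Note that the weights are created once and never change inside the loop, while `h` is overwritten at every step:

```python
import numpy as np

rng = np.random.default_rng(0)
input_dim, hidden_dim, seq_len = 4, 3, 5

# Weights are created once and shared by every time step (random, untrained).
W_xh = rng.normal(size=(hidden_dim, input_dim))
W_hh = rng.normal(size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)

def rnn_forward(inputs, h0=None):
    """Run a vanilla RNN over `inputs` (seq_len x input_dim); return the final hidden state."""
    h = np.zeros(hidden_dim) if h0 is None else h0
    for x_t in inputs:
        # Update function: the new hidden state depends on the current input and the previous state.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
    return h

inputs = rng.normal(size=(seq_len, input_dim))
h_final = rnn_forward(inputs)
print(h_final.shape)  # (3,)
```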

Words, words, words

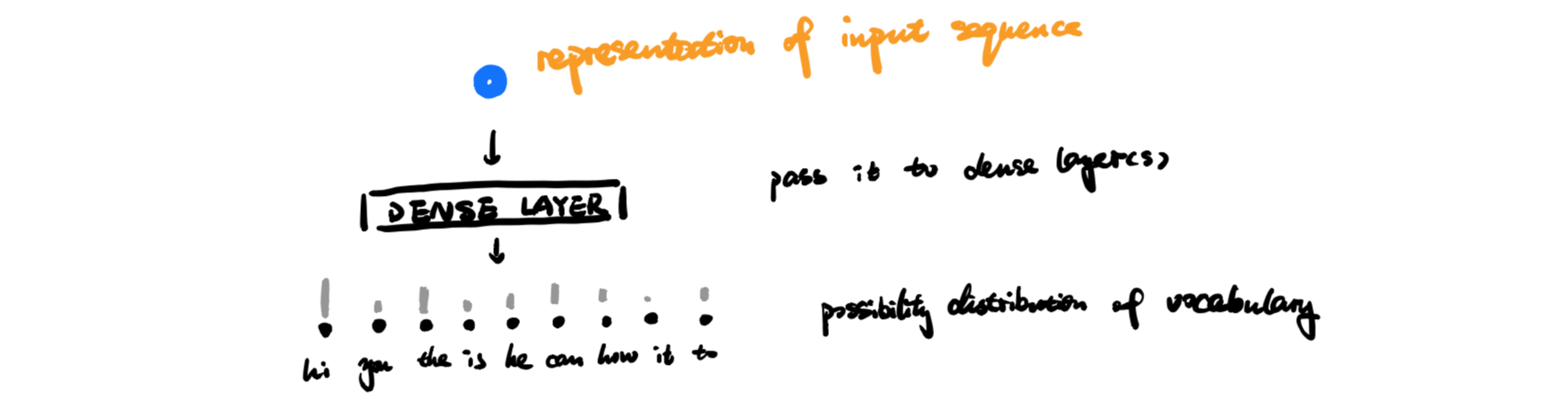

As illustrated above, we can use the RNN's final hidden state as the representation of the input sequence. To predict the next word, we also need a vocabulary, the fixed set of words the model can choose from.

This is similar to image classification: given a representation of an image, a classifier computes a probability distribution over the categories and takes the most probable one as its prediction. Likewise, a text generation model outputs a probability for every word in the vocabulary.
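The step from hidden state to a distribution over the vocabulary can be sketched as a linear output layer followed by a softmax. This assumes a hypothetical five-word vocabulary, a random output layer (`W_out`, `b_out`), and a random vector standing in for the RNN's final hidden state:

```python
import numpy as np

rng = np.random.default_rng(0)
vocab = ["<eos>", "I", "like", "cats", "dogs"]   # hypothetical toy vocabulary
hidden_dim = 3

# Hypothetical output layer: one score (logit) per vocabulary word.
W_out = rng.normal(size=(len(vocab), hidden_dim))
b_out = np.zeros(len(vocab))

def softmax(z):
    z = z - z.max()            # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

h_final = rng.normal(size=hidden_dim)       # stands in for the RNN's final hidden state
probs = softmax(W_out @ h_final + b_out)    # probability for every word in the vocabulary
next_word = vocab[int(np.argmax(probs))]    # greedy choice: the most probable word
print(next_word, probs.round(3))
```

Taking the argmax mirrors the classification analogy; sampling from `probs` instead is also common when more varied text is wanted.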

To generate a longer sequence, append the predicted word to the input sequence and repeat the process.
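The append-and-repeat loop can be sketched as greedy decoding with a toy RNN. Everything here is an assumption for illustration: the six-word vocabulary, the random untrained parameters (so the generated words are gibberish), and the `<eos>` token used to stop early:

```python
import numpy as np

rng = np.random.default_rng(42)
vocab = ["<eos>", "the", "cat", "sat", "on", "mat"]   # hypothetical toy vocabulary
V, E, H = len(vocab), 4, 8                            # vocab size, embedding size, hidden size

# Random, untrained parameters: the output is meaningless, the loop structure is the point.
emb = rng.normal(size=(V, E))
W_xh = rng.normal(size=(H, E))
W_hh = rng.normal(size=(H, H))
W_out = rng.normal(size=(V, H))

def next_token(token_ids):
    """Encode the whole sequence with an RNN and return the most probable next token id."""
    h = np.zeros(H)
    for t in token_ids:
        h = np.tanh(W_xh @ emb[t] + W_hh @ h)
    logits = W_out @ h
    return int(np.argmax(logits))   # argmax of the logits equals argmax of their softmax

def generate(prompt_ids, max_new_tokens=5):
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        nxt = next_token(ids)
        ids.append(nxt)             # append the prediction to the input and repeat
        if vocab[nxt] == "<eos>":
            break
    return ids

out = generate([vocab.index("the")])
print(" ".join(vocab[i] for i in out))
```

For clarity the sketch re-runs the RNN over the whole sequence at every step; a practical implementation would keep the last hidden state and feed in only the newly generated token.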

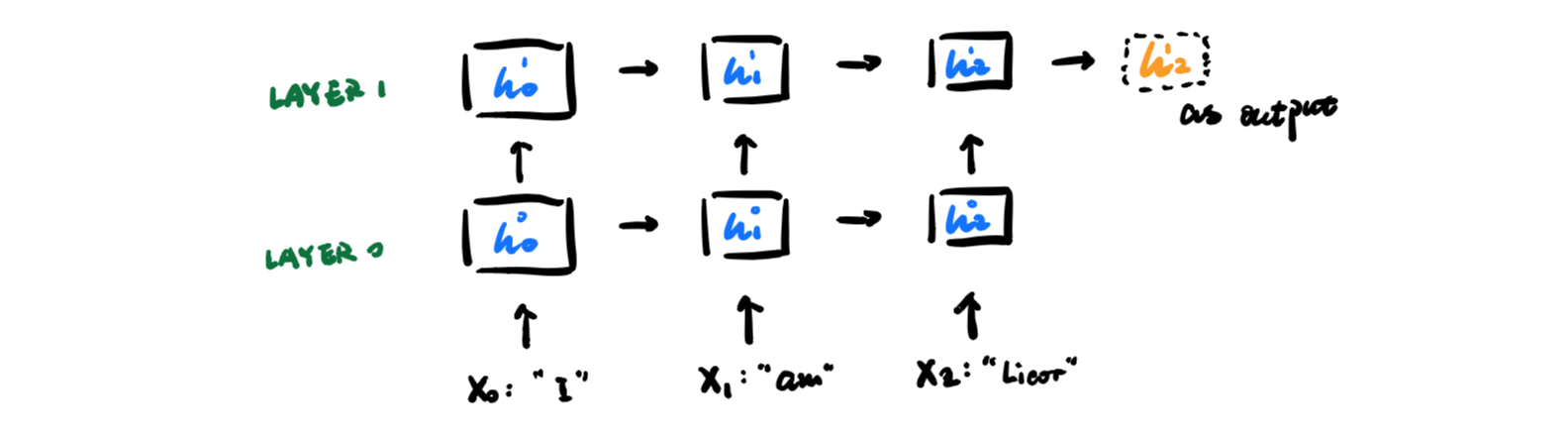

Stacked RNN

A single layer is hardly "deep" learning. To add more layers, we need the hidden state from every time step, not just the final one: the second layer receives the sequence of hidden states produced by the first layer as its own input.
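A minimal two-layer sketch, assuming vanilla tanh RNN layers with random, untrained weights (`make_layer` and `rnn_layer` are illustrative helpers). The key difference from a single layer is that `rnn_layer` returns the hidden state at every time step, so the next layer has a full sequence to read:

```python
import numpy as np

rng = np.random.default_rng(0)

def make_layer(input_dim, hidden_dim):
    """Random, untrained weights for one vanilla RNN layer (illustrative only)."""
    return (rng.normal(size=(hidden_dim, input_dim)),
            rng.normal(size=(hidden_dim, hidden_dim)))

def rnn_layer(inputs, W_xh, W_hh):
    """Return the hidden state at EVERY time step, shape (seq_len, hidden_dim)."""
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in inputs:
        h = np.tanh(W_xh @ x_t + W_hh @ h)
        states.append(h)
    return np.stack(states)

seq_len, input_dim, hidden_dim = 5, 4, 3
x = rng.normal(size=(seq_len, input_dim))

layer1 = make_layer(input_dim, hidden_dim)
layer2 = make_layer(hidden_dim, hidden_dim)

h1 = rnn_layer(x, *layer1)    # layer 1 reads the raw inputs
h2 = rnn_layer(h1, *layer2)   # layer 2 reads layer 1's hidden states, one per time step
final = h2[-1]                # the top layer's final hidden state represents the sequence
```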

Text generation with Transformer

The Transformer computes attention over all positions in parallel, as explained in Transformer: successor of RNN. This makes building the text representation faster than with an RNN, which has to update its hidden state one step at a time, and usually yields a better representation. The rest of the process is almost identical: take this representation and predict the next word.
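To make the parallelism concrete, here is a minimal NumPy sketch of causal self-attention. It assumes a single head and, for brevity, uses the inputs directly as queries, keys and values, whereas a real Transformer applies learned projection matrices. All positions are handled by matrix products rather than a loop over time steps, and the causal mask stops each position from attending to later words, which next-word prediction requires:

```python
import numpy as np

def causal_self_attention(x):
    """Single-head scaled dot-product self-attention with a causal mask.

    Simplification: queries, keys and values are the inputs themselves
    (no learned projection matrices).
    """
    seq_len, d = x.shape
    scores = x @ x.T / np.sqrt(d)                       # all position pairs at once
    mask = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(mask, -np.inf, scores)            # position i may not look at j > i
    scores -= scores.max(axis=1, keepdims=True)         # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)       # each row is a distribution
    return weights @ x                                  # one matrix product, no loop over time

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 4))      # 5 token representations of size 4
out = causal_self_attention(x)
```

The output row for the last position can then go through an output layer and softmax, just as the RNN's final hidden state does.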

Dealing with unknown words

It is frustrating that NLP tasks usually rely on a fixed vocabulary, which means the model can neither understand out-of-vocabulary (OOV) words nor generate novel ones. Rather than simply removing an OOV word or replacing it with a special token, we can use some alternative solutions:

split it

character and n-gram

A simple way is to split the word into characters or character n-grams. However, this does not preserve the semantic meaning of the word, since individual characters or short n-grams carry little meaning on their own.

morpheme and subword

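From a linguistic point of view, a word can be decomposed into morphemes, the smallest units that carry meaning. Decomposing an unknown word into morphemes or other common subwords therefore makes it possible to infer its meaning from parts the model has already seen: "unhappiness", for example, splits into "un", "happi" and "ness".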
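A minimal sketch of subword segmentation, assuming a small hand-made subword vocabulary (hypothetical) and a greedy longest-match-first rule similar in spirit to WordPiece. Real tokenizers such as BPE or WordPiece learn their vocabulary from a corpus, and the learned pieces do not always line up with true morphemes; this sketch skips the learning step entirely:

```python
def segment(word, vocab):
    """Greedy longest-match-first segmentation of `word` into pieces from `vocab`.

    Returns None if some part of the word cannot be covered by the vocabulary.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Try the longest remaining substring first, then shrink it one character at a time.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:          # no vocabulary entry matches at this position
            return None
        pieces.append(word[start:end])
        start = end
    return pieces

# Hypothetical toy subword vocabulary.
vocab = {"un", "happi", "happy", "ness", "kind", "s"}
print(segment("unhappiness", vocab))  # ['un', 'happi', 'ness']
print(segment("unkindness", vocab))   # ['un', 'kind', 'ness']
```

Even though "unkindness" may never have been seen as a whole word, each of its pieces has been, so the model can still build a representation for it.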

update vocabulary and embedding

Another way is to add a new word to the vocabulary as soon as it appears, and to initialize its embedding with the average of the embeddings of its surrounding words.
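A minimal sketch of this idea, assuming a random embedding matrix, a hypothetical helper `add_word`, and a context window that the caller chooses. The new word gets the next free id, and its embedding row is the mean of the known neighbours' rows; falling back to a zero vector when no neighbour is known is an extra assumption of this sketch:

```python
import numpy as np

rng = np.random.default_rng(0)
word_to_id = {w: i for i, w in enumerate(["the", "cat", "sat", "on", "mat"])}
embeddings = rng.normal(size=(len(word_to_id), 4))   # one row per known word

def add_word(new_word, context_words, word_to_id, embeddings):
    """Add `new_word` to the vocabulary and return the enlarged embedding matrix.

    The new row is the mean of the embeddings of the known context words;
    unknown context words are ignored.
    """
    known = [word_to_id[w] for w in context_words if w in word_to_id]
    if known:
        init = embeddings[known].mean(axis=0)
    else:
        init = np.zeros(embeddings.shape[1])    # assumed fallback when no neighbour is known
    word_to_id[new_word] = embeddings.shape[0]  # next free id
    return np.vstack([embeddings, init])

# Unseen word "floof" in "the floof sat on the mat"; its neighbours in a small window:
embeddings = add_word("floof", ["the", "sat", "on"], word_to_id, embeddings)
new_id = word_to_id["floof"]
```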